A Guide to SEO A/B Testing for Measurable Growth

So, you want to stop throwing SEO changes at the wall to see what sticks? Good. SEO A/B testing is how you move from guesswork to a data-backed strategy.

It’s a pretty straightforward concept: you take a group of similar pages, split them in half, and apply a change to one half (the “variant”). The other half (the “control”) stays the same. Then you watch to see what happens to your organic traffic, click-through rates, and rankings. This scientific approach finally gives us concrete proof of what actually works.

#Moving Beyond Guesswork with SEO A/B Testing

For way too long, SEO has been a mix of “best practices,” gut feelings, and copying what the competition is doing. While those things can give you ideas, they don’t give you certainty. SEO A/B testing flips that script. It’s a disciplined process that forces you to prove your ideas with real-world data from your own audience.

This is the shift that separates the pros from the amateurs. It lets you de-risk big site changes, confidently roll out winning strategies across thousands of pages, and even protect your traffic from those dreaded, unpredictable Google updates.

#Finding The Right Pages To Test

Your entire experiment hinges on picking the right group of pages to test. If you start with the wrong ones, your data will be messy, and you won’t learn a thing. The goal is to find a clean, stable environment where your changes are the only thing influencing the outcome.

The best place to start is with pages built from the same template. Think product detail pages, category pages, or blog posts. These pages are uniform, which makes it much easier to isolate the impact of your test. Because one template change rolls out to every page that uses it, a small tweak here can have a massive business impact, and the uniformity makes it far easier to attribute a lift to your change rather than to random traffic spikes.

Here’s what to look for in a good test group:

- Enough Traffic: You need enough organic traffic to get a statistically significant result in a reasonable amount of time. Testing on pages that get 10 visits a month will take forever.
- A Shared Template: Pages that share a design and structure (like all product pages) are perfect. This ensures you’re applying the change consistently.
- High Business Value: Focus on pages that actually make you money. A 5% lift on a key service page is worth far more than a 20% lift on a forgotten blog post.
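If your page inventory lives in a spreadsheet or crawl export, the three criteria above can be turned into a first-pass filter. This is a minimal sketch: the `Page` fields, the 100-clicks-per-month floor, and the "high" business-value label are hypothetical placeholders you’d swap for your own data and thresholds.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    template: str                # e.g. "product", "category", "blog"
    monthly_organic_clicks: int
    business_value: str          # hypothetical label: "high", "medium", "low"


def candidate_pages(pages, template, min_monthly_clicks=100):
    """Keep pages that share one template, get meaningful organic traffic,
    and matter to the business. The threshold is illustrative only."""
    return [
        p for p in pages
        if p.template == template
        and p.monthly_organic_clicks >= min_monthly_clicks
        and p.business_value == "high"
    ]


pages = [
    Page("/p/red-shoe", "product", 900, "high"),
    Page("/p/blue-shoe", "product", 10, "high"),    # too little traffic
    Page("/blog/old-post", "blog", 2000, "low"),    # different template
    Page("/p/green-shoe", "product", 450, "high"),
]
print([p.url for p in candidate_pages(pages, "product")])
# ['/p/red-shoe', '/p/green-shoe']
```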

The secret to a clean, actionable result is isolating variables. Testing on homogeneous groups of pages makes the outcome far more trustworthy, and this simple step is often what separates a successful test from a complete waste of time.

To get any SEO test off the ground, you need a few key pieces in place. The table below breaks down the absolute must-haves for a reliable experiment.

#Essential Components for a Successful SEO Test

| Component | Description | Why It's Critical |
|---|---|---|
| **Clear Hypothesis** | A specific statement about the change and expected outcome (e.g., "Adding FAQs will increase clicks by 10%"). | It forces you to define what success looks like *before* you start, preventing you from cherry-picking results later. |
| **Control & Variant Groups** | A set of pages to remain unchanged (control) and a similar set to receive the change (variant). | This is the foundation of A/B testing. Without a control, you can't prove your change caused the result. |
| **Sufficient Traffic** | A large enough sample of pages with consistent organic traffic to reach statistical significance. | Low traffic means the test will run for months, and the results will be unreliable and easily skewed by random fluctuations. |
| **Reliable Tracking** | Accurate tracking of key metrics like impressions, clicks, CTR, and conversions from organic search. | If you can't measure it, you can't manage it. Your data must be clean and trustworthy to make a call. |

Without these core elements, you’re not really testing; you’re just making changes and hoping for the best.

#The Lifecycle Of An SEO Test

It helps to think of SEO testing as a continuous cycle, not a one-off project. It’s a full workflow that starts with an idea and ends with a site-wide improvement, feeding insights back into the next idea. Over time, you build a powerful library of what works specifically for your website.

This process shows you which strategies truly move the needle through direct evidence, rather than relying on lists of proven tips & strategies to increase blog traffic that you’ve only read about.

This accumulated knowledge becomes a serious competitive advantage. You can see how this approach pays off by looking at successful SEO case studies where data-backed changes led to huge wins.

#How to Formulate a Powerful Test Hypothesis

Let’s get one thing straight: a successful SEO A/B testing program is built on sharp questions, not random ideas. Every test you run is only as good as the hypothesis behind it. Without one, you’re just throwing changes at the wall and hoping something sticks.

Vague goals like “improve meta descriptions” or “make titles better” aren’t hypotheses. They’re wishes. A real hypothesis is a specific, testable statement that predicts a measurable outcome. It’s the bridge between an interesting piece of data and a potential solution you want to try.

#Grounding Your Ideas in Data

Your best test ideas won’t come from a team brainstorm; they’ll surface from your data. Before you even think about what to change, you need to find a problem or an opportunity that the numbers are pointing to. This is where the real SEO detective work begins.

Start digging into your analytics and search performance tools. You’re looking for patterns, weird anomalies, and pages that just aren’t pulling their weight.

- Google Search Console (GSC): Got pages with tons of impressions but a depressingly low click-through rate (CTR)? That’s a massive sign that your title tags or meta descriptions aren’t doing their job.
- User Behavior Analytics: Are heatmaps from a tool like Hotjar showing that users are completely ignoring a critical section of your content? Maybe restructuring the page with an inverted pyramid style could boost engagement.
- Keyword Research Tools: Have you found valuable long-tail keywords that your pages aren’t really optimized for? Adding a targeted FAQ section could be a quick win to capture that traffic.

Each of these data points gives you the “why” for your test. You’re not just guessing anymore; you’re responding to a clear signal from your users or Google itself.
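For the first signal in that list, high impressions with a weak CTR, a short script over a Search Console performance export grouped by page can surface candidates. This sketch assumes each row is a dict with `page`, `clicks`, and `impressions` keys; the 1,000-impression floor and the "below 75% of site average" cutoff are arbitrary example thresholds.

```python
def low_ctr_pages(rows, min_impressions=1000, ctr_ratio=0.75):
    """Flag pages with plenty of impressions but a CTR well below the site average.

    rows: dicts with "page", "clicks", "impressions" (e.g. a GSC export by page).
    Results are sorted by impressions, biggest opportunities first.
    """
    rows = list(rows)
    site_ctr = sum(r["clicks"] for r in rows) / sum(r["impressions"] for r in rows)
    flagged = [
        r for r in rows
        if r["impressions"] >= min_impressions            # enough data to judge CTR
        and r["clicks"] / r["impressions"] < ctr_ratio * site_ctr
    ]
    return sorted(flagged, key=lambda r: r["impressions"], reverse=True)


rows = [
    {"page": "/c/shoes", "clicks": 300, "impressions": 20000},   # 1.5% CTR
    {"page": "/c/boots", "clicks": 600, "impressions": 10000},   # 6.0% CTR
    {"page": "/c/socks", "clicks": 40, "impressions": 500},      # too few impressions
    {"page": "/c/hats", "clicks": 100, "impressions": 8000},     # 1.25% CTR
]
print([r["page"] for r in low_ctr_pages(rows)])
# ['/c/shoes', '/c/hats']
```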

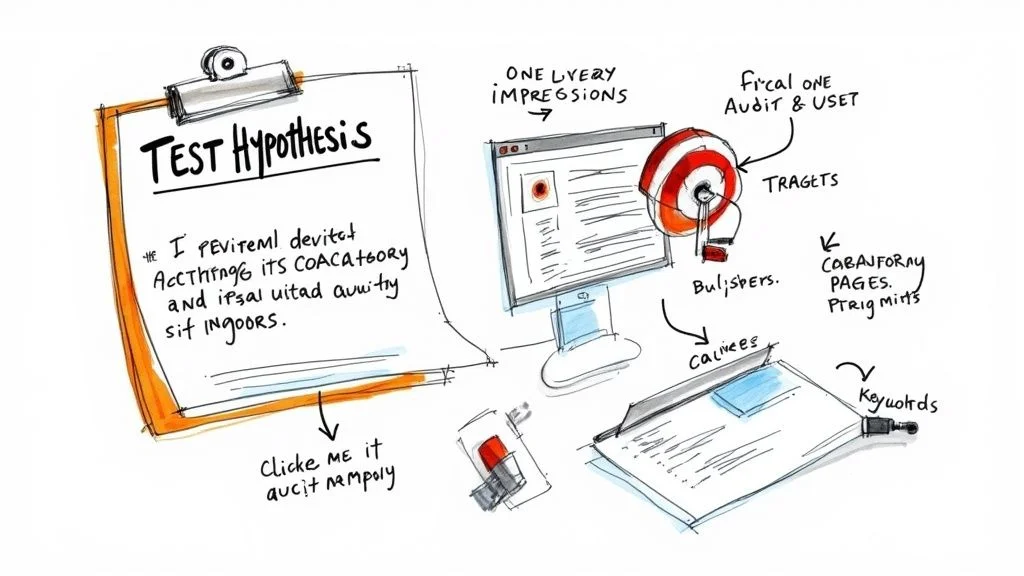

#The Anatomy of a Strong Hypothesis

Once you’ve got an observation backed by data, it’s time to frame it properly. I’ve found a classic structure that works wonders and keeps everyone focused:

If we [implement this specific change], then we will see [this measurable outcome] because [this is the reason for the change].

This simple framework forces you to be crystal clear about the action, the expected result, and the logic that connects them. It turns a fuzzy idea into a scientific premise you can actually prove or disprove.

Let’s walk through a real-world example. Say you find a group of category pages with sky-high impressions but a CTR of only 1.5%, which is way below your site average.

- Vague Idea: “Let’s make the meta descriptions better.”
- Strong Hypothesis: “If we add a benefit-driven call-to-action (like ‘Free Shipping on All Orders’) to the meta descriptions of our category pages, then we will increase organic CTR by 5% because it will create a more compelling snippet that stands out in the SERPs.”

See the difference? This version is specific, measurable, and tied directly to a business goal. It sets you up for a clean test where you know exactly what success looks like.
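One detail worth pinning down before launch: “increase CTR by 5%” from a 1.5% baseline could mean a relative lift (1.5% to 1.575%) or five percentage points (1.5% to 6.5%), which are wildly different targets. Writing the hypothesis down as a small record makes the “If / then / because” structure and the exact target explicit. The `Hypothesis` class is a hypothetical illustration, not part of any tool, and it assumes the lift is relative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    change: str
    metric: str
    baseline: float        # current value as a fraction: 0.015 means 1.5%
    expected_lift: float   # RELATIVE lift as a fraction: 0.05 means +5%
    reason: str

    def target(self) -> float:
        """Metric value that would confirm the hypothesis (relative lift)."""
        return self.baseline * (1 + self.expected_lift)

    def statement(self) -> str:
        return (f"If we {self.change}, then we will see {self.metric} rise from "
                f"{self.baseline:.2%} to {self.target():.3%} because {self.reason}.")


h = Hypothesis(
    change="add a benefit-driven call-to-action to category meta descriptions",
    metric="organic CTR",
    baseline=0.015,
    expected_lift=0.05,
    reason="a more compelling snippet stands out in the SERPs",
)
print(h.statement())
```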

#Defining Your Success Metrics Upfront

The final piece of a solid hypothesis is deciding exactly what you will measure. You need to nail down your primary Key Performance Indicator (KPI) before the test even starts. This is your single source of truth for calling a winner.

For any SEO A/B testing experiment, the most common primary KPI is organic clicks as reported by Google Search Console. While CTR is a great diagnostic metric, total clicks represent the actual traffic gain, and that’s usually what we’re after.

Beyond your main KPI, it’s smart to track secondary metrics to get the full story. These can help you understand why a test won or lost, which is just as important.

Common Metrics to Track:

- Primary KPI: Total organic clicks
- Secondary KPIs:
  - Click-Through Rate (CTR)
  - Total organic impressions
  - Average ranking position
  - On-page engagement (bounce rate, time on page)
  - Goal completions or conversions

By setting these benchmarks before you launch, you take all the bias out of the analysis later. You’ve already defined what a “win” is. When the results roll in, the decision to roll out the change or scrap it becomes a simple, data-backed conclusion. No arguments, just data.
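Defining the Search Console metrics in code before launch is one way to lock them in. This minimal sketch aggregates page-level rows for one group; it assumes each row has `clicks`, `impressions`, and `position` fields. Weighting position by impressions approximates how Search Console averages position, but it is not guaranteed to match the report exactly. Engagement and conversion metrics come from your analytics tool and are left out here.

```python
def group_metrics(rows):
    """Aggregate page-level rows (clicks, impressions, position) for one test group."""
    clicks = sum(r["clicks"] for r in rows)
    impressions = sum(r["impressions"] for r in rows)
    # Impression-weighted average position: high-visibility pages count more.
    weighted_position = sum(r["position"] * r["impressions"] for r in rows)
    return {
        "clicks": clicks,                                        # primary KPI
        "impressions": impressions,                              # secondary
        "ctr": clicks / impressions if impressions else 0.0,     # secondary
        "avg_position": weighted_position / impressions if impressions else None,
    }


variant_rows = [
    {"clicks": 30, "impressions": 1000, "position": 4.0},
    {"clicks": 10, "impressions": 1000, "position": 8.0},
]
print(group_metrics(variant_rows))
```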

#Designing Your Test for Clean and Clear Results

Alright, you’ve got a solid hypothesis. Now comes the fun part: building the actual experiment. This is the technical core of SEO A/B testing, and getting it right is what separates a clear, actionable win from a confusing mess of data.

Our goal here is to create a controlled environment where the only thing that changes is the specific element you’re testing.

The gold standard for this is a split test. It’s a straightforward concept: take a large group of similar pages (think product pages, category pages, or blog posts) and randomly divide them into two buckets. One is the control group, which you leave alone. The other is the variant group, where you roll out your change.

By tracking the variant group’s performance against the control, you can isolate the impact of your change from all the other noise out there - like Google updates, seasonal traffic swings, or a competitor’s big marketing push.
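The random split itself can be very small. This is a sketch, assuming you already have a list of same-template URLs; `split_pages` and the seed value are illustrative. A fixed seed keeps the assignment reproducible, so every page stays in the same group for the whole test. On small page sets a purely random split can still leave the groups unbalanced by chance.

```python
import random


def split_pages(urls, seed=42):
    """Randomly assign pages to control or variant (as close to 50/50 as possible)."""
    shuffled = sorted(urls)              # sort first so input order doesn't matter
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "variant": shuffled[half:]}


groups = split_pages([f"/p/{i}" for i in range(10)])
print(len(groups["control"]), len(groups["variant"]))   # 5 5
```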

#Crafting Truly Comparable Page Groups

The entire success of your split test rests on how you build these page groups. They need to be as statistically identical as possible before the test ever begins. If one group already gets more traffic or has a different seasonal trend, your results will be contaminated from day one.

Think about it. If you’re testing a new call-to-action on product pages, you can’t just throw all your best-sellers into the variant group and the duds into the control. The variant group would almost certainly “win,” but it would tell you nothing about your change.

To get this right, you have to segment your pages based on a few key characteristics:

- Historical Traffic Volume: Make sure both groups have a similar average number of daily or weekly organic sessions over the last few months.
- Traffic Seasonality: Group pages that follow similar traffic patterns. Don’t mix your “Christmas gift ideas” pages with your “summer vacation deals” pages.
- Page Template: Only group pages that share the same underlying code and structure. This ensures your change is applied consistently.

This step is absolutely non-negotiable for a trustworthy result. Many of the more advanced SEO testing platforms will actually automate this for you, using historical data to create well-balanced groups.
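If you’re balancing groups yourself, one simple technique is matched pairs: rank pages by historical traffic, take them two at a time, and flip a coin to decide which page of each pair goes to the variant. Best-sellers then land evenly in both groups instead of piling up on one side. This is a sketch under stated assumptions: `traffic_by_url` holds average daily organic sessions per page, the pages already share a template and seasonality pattern, and the function names are hypothetical.

```python
import random


def balanced_split(traffic_by_url, seed=42):
    """Matched-pair split: similar-traffic pages are paired, then one of each
    pair is randomly sent to control and the other to variant."""
    rng = random.Random(seed)
    ranked = sorted(traffic_by_url, key=lambda u: (-traffic_by_url[u], u))
    control, variant = [], []
    for i in range(0, len(ranked) - 1, 2):
        a, b = ranked[i], ranked[i + 1]
        if rng.random() < 0.5:          # coin flip within each pair
            a, b = b, a
        control.append(a)
        variant.append(b)
    if len(ranked) % 2:                 # odd page out goes to control
        control.append(ranked[-1])
    return control, variant


def mean_traffic(urls, traffic_by_url):
    return sum(traffic_by_url[u] for u in urls) / len(urls)


traffic = {f"/p/{i}": 10 * (i + 1) for i in range(10)}   # 10 to 100 sessions/day
control, variant = balanced_split(traffic)
print(mean_traffic(control, traffic), mean_traffic(variant, traffic))
```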

#Implementing Your Changes and Technical Guardrails

With your groups defined, it’s time to apply the change to the variant pages. How you do this depends on what you’re testing. A simple title tag update can often be done right in your CMS, but tweaking your internal linking structure might need a developer’s help. For some inspiration on what to test, our on-page SEO checklist is a great place to start.

No matter how simple the change, you absolutely must put technical guardrails in place. These aren’t just suggestions; they protect your site from getting penalized by Google.

I’ve seen this go wrong too many times. An SEO test without proper canonicalization or server-side rendering isn’t just unreliable - it’s dangerous. You can easily create duplicate content issues that torpedo your rankings.

Make sure these two critical pieces are locked in:

- Server-Side Rendering (SSR): Render your change on the server, not in the user’s browser (client-side). This is the most reliable way to make sure Googlebot sees and indexes the correct version of the page, control or variant, every time it crawls.
- Consistent Canonical Tags: All variant pages need a self-referencing canonical tag. This is a clear signal to Google that each variant URL is the intended, “master” version of that page, which heads off any potential duplicate content confusion with the control group.
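Here is what those two guardrails can look like together in a server-side template, using the meta description test from the hypothesis example. This is a framework-agnostic sketch: `VARIANT_URLS`, `ORIGIN`, and `render_head` are hypothetical names, and `example.com` is a placeholder. Because the change is built into the HTML before the response is sent, Googlebot and users receive the same markup, and every page points its canonical tag at its own URL.

```python
from html import escape

# Hypothetical test setup: the page paths assigned to the variant group.
VARIANT_URLS = {"/c/boots", "/c/sandals"}
ORIGIN = "https://www.example.com"   # placeholder domain


def render_head(path: str, base_description: str) -> str:
    """Build head tags on the server so crawlers and users get identical HTML."""
    description = base_description
    if path in VARIANT_URLS:
        # Variant change: append the benefit-driven call-to-action.
        description += " Free Shipping on All Orders."
    canonical = ORIGIN + path        # self-referencing for control AND variant
    return (
        f'<meta name="description" content="{escape(description)}">\n'
        f'<link rel="canonical" href="{escape(canonical)}">'
    )


print(render_head("/c/boots", "Shop our full range of boots."))
```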

#Expanding Beyond Simple A/B Tests

While a standard A/B test is incredibly powerful, it’s not your only option. The global SEO industry is projected to reach 106.15 billion by 2030, because businesses are hungry for the kind of validated results that testing provides.

For more complex scenarios, you might want to delve into multivariate testing. This approach lets you test multiple variables at once - for example, a new headline and a different meta description - to see which combination delivers the biggest impact. It’s more complex to set up, but it can dramatically speed up your learning curve.

By carefully designing your test, balancing your page groups, and locking in your technical safeguards, you’re creating the perfect conditions for a clean experiment - one that delivers results you can actually trust.

#Analyzing Test Data with Statistical Confidence

Alright, your SEO test is live. Now comes the hard part: waiting. But don’t just sit on your hands. This is the measurement phase, and your main job is to let the data roll in without jumping to conclusions. The whole point is to tell the difference between a real impact and random noise, which means getting comfortable with statistical confidence.

Simply put, statistical significance is about certainty. It answers the question: is the performance difference between my control and variant pages a real result of my change, or just a fluke? The industry standard is a 95% confidence level. Roughly, that means if your change had no real effect, a difference as large as the one you’re seeing would show up by chance less than 5% of the time.
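To make the 95% threshold concrete, here is one simple way to get a p-value: a permutation test on per-page click changes. It repeatedly shuffles pages between the two groups and counts how often chance alone produces a gap as big as the real one; a p-value below 0.05 corresponds to 95% confidence. This is an illustrative technique, not the model any particular testing platform uses, and it assumes each input value is one page’s percentage change in organic clicks (test period vs. pre-test period).

```python
import random


def _mean(xs):
    return sum(xs) / len(xs)


def permutation_p_value(control, variant, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in mean per-page uplift.

    Pages are the unit of analysis because pages are what was randomized.
    """
    observed = _mean(variant) - _mean(control)
    pooled = list(control) + list(variant)
    n_variant = len(variant)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = _mean(pooled[:n_variant]) - _mean(pooled[n_variant:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # The +1 correction avoids reporting an impossible p-value of exactly 0.
    return (extreme + 1) / (n_permutations + 1)


control = [0, 1, -1, 2, -2, 0, 1, -1, 0, 0]   # % change per control page
variant = [x + 20 for x in control]           # every variant page up ~20%
print(permutation_p_value(control, variant) < 0.05)   # True
```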

#Mastering Test Duration and Timing

So, how long should a test run? The answer isn’t a set number of days. It depends on two things: hitting statistical significance and accounting for normal traffic cycles. I’ve seen it a hundred times: a team ends a test on a Friday after a great week, completely forgetting that search behavior can flip on its head over the weekend.

To get a real, honest picture, you have to run your test for full weekly cycles. This smooths out the daily peaks and valleys in your traffic and gives you a much more reliable average. A test that hasn’t run for at least two full weeks is rarely trustworthy. For most tests, a 14- to 28-day window is a solid baseline for gathering enough data to make a call.
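The “full weekly cycles” rule is easy to get wrong by a day. This small sketch computes an end date that covers whole weeks and at least the two-week minimum, counting both the start and end days; `planned_end` is a hypothetical helper name.

```python
import math
from datetime import date, timedelta


def planned_end(start: date, min_days: int = 14) -> date:
    """Last day of a test that runs for whole weeks and at least `min_days` days.

    Both the start day and the end day count as test days.
    """
    weeks = math.ceil(min_days / 7)
    return start + timedelta(days=7 * weeks - 1)


print(planned_end(date(2024, 1, 1)))        # 2024-01-14
print(planned_end(date(2024, 1, 1), 20))    # 2024-01-21
```

Starting on Monday, January 1, 2024 gives an end date of Sunday the 14th, so every day of the week appears exactly twice in the data.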

#Isolating Impact with Predictive Modeling

One of the toughest parts of SEO testing is attribution. How do you know for sure that your traffic lift came from your title tag change and not a surprise Google update or a seasonal trend? This is where the pros separate themselves from the amateurs, moving beyond simple comparisons and using predictive modeling.

This is all about statistical rigor. Top-tier SEO testing platforms don’t just compare Group A to Group B. They use sophisticated models that analyze about 100 days of historical traffic data for both your control and variant page groups before the test even starts. By learning from past patterns and natural fluctuations, these models can forecast what your traffic would have looked like without your change.

This forecast is your “what if” scenario. When you compare your actual traffic to this predicted baseline, you can confidently measure the true uplift from your experiment. It effectively filters out all the external noise.
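Commercial platforms use far more sophisticated forecasting models, but the core idea fits in a few lines. This deliberately simple ratio model is an illustration, not any vendor’s method: it assumes the variant group’s traffic is a stable multiple of the control group’s before the test, uses that multiple to forecast the variant from the control during the test, and reports actual traffic against the forecast. All inputs are daily organic clicks.

```python
def estimated_uplift(control_pre, variant_pre, control_test, variant_test):
    """Relative uplift of the variant group versus a control-based forecast.

    Site-wide effects (an algorithm update, seasonality) move both groups,
    so they largely cancel out of the comparison.
    """
    ratio = sum(variant_pre) / sum(control_pre)   # pre-test relationship
    predicted = ratio * sum(control_test)         # "what if" baseline
    actual = sum(variant_test)
    return (actual - predicted) / predicted


# 100 pre-test days, then a 14-day test during which the whole site dipped.
control_pre, variant_pre = [100] * 100, [50] * 100
control_test, variant_test = [80] * 14, [44] * 14
print(round(estimated_uplift(control_pre, variant_pre, control_test, variant_test), 3))
# 0.1
```

Both groups lost traffic during the test, yet the variant still beat its forecast by 10%; a naive before-and-after comparison would have called it a loss.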

#Interpreting GSC Data and Beyond

Google Search Console is your ground truth here. The key is to look at the whole picture, not just one metric. You need to analyze both your primary and secondary metrics to understand the full story.

Here’s a simple framework I use for analysis:

- Primary Metric (Clicks): This is your main goal. Did the variant group get a statistically significant bump in total organic clicks?
- Secondary Metrics (CTR & Impressions): These tell you why you won or lost. Did clicks go up because your new meta description killed it on CTR, or did you start ranking for new terms and get more impressions?
- Negative Indicators: Always check for collateral damage. Did your change boost clicks but tank your average ranking position? Or maybe it hurt user engagement metrics like bounce rate?

A real win is a clear, positive impact on your main KPI without wrecking your secondary metrics. A 10% jump in clicks that comes with a 20% drop in conversions is not a win. It’s a trap.
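The framework above can be written as a simple decision rule. In this sketch, `call_result` is a hypothetical helper, the 0.05 significance level matches the 95% standard, and the -5% tolerance on secondary metrics is an arbitrary example you’d tune for your own site. Each secondary change is expressed so that negative means worse (flip the sign for average position, where a lower number is better).

```python
def call_result(primary_change, p_value, guardrail_changes,
                alpha=0.05, max_guardrail_drop=-0.05):
    """Classify a finished test.

    primary_change: relative change in organic clicks (0.10 means +10%).
    guardrail_changes: relative changes in secondary metrics, negative = worse,
        e.g. {"conversions": -0.20, "ctr": 0.08}.
    """
    if p_value >= alpha:
        return "inconclusive"
    if primary_change < 0:
        return "loss"
    damaged = sorted(m for m, c in guardrail_changes.items() if c < max_guardrail_drop)
    if damaged:
        return "not a win: " + ", ".join(damaged) + " dropped"
    return "win"


# The trap from the paragraph above: +10% clicks, -20% conversions.
print(call_result(0.10, 0.01, {"conversions": -0.20}))
# not a win: conversions dropped
```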

This kind of holistic analysis is what leads to smart decisions. When you understand the interplay between these data points, you get insights that go way beyond a simple “win” or “loss.” It’s a core part of learning how to measure SEO effectively.

Once you have a result that’s both statistically significant and holistically positive, you’re ready to roll out your change with confidence.

#Common SEO Testing Mistakes and How to Avoid Them

Even the most well-oiled SEO A/B testing machine can sputter and stall. A single wrong move can throw your results into question, waste weeks of your time, or worse, actually damage your site’s rankings. Knowing where the landmines are is the first step to building a testing process you can actually trust.

The classic mistake I see all the time is trying to test too many things at once. It’s so tempting to bundle a new title tag, a fresh meta description, and a tweaked H1 into one big experiment. But when it’s all said and done, you’re left scratching your head. Which change actually moved the needle? You can’t pin the success or failure on any single element, which means the result isn’t actionable.

To keep your data clean, stick to one variable per experiment. If you have ideas for both titles and meta descriptions, that’s great! Just run them as two separate tests. It might feel like you’re moving slower, but it’s the only way to get unambiguous data that tells you exactly what resonates with your audience.
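A lightweight pre-launch guard can enforce the one-variable rule. This sketch assumes you can describe the control and variant templates as dictionaries mapping page elements to their values (a hypothetical representation); it refuses to proceed when more than one element differs.

```python
def changed_elements(control: dict, variant: dict) -> set:
    """Return the page elements whose values differ between control and variant."""
    keys = control.keys() | variant.keys()
    return {k for k in keys if control.get(k) != variant.get(k)}


def assert_single_variable(control: dict, variant: dict) -> str:
    """Return the single changed element, or raise if the test bundles changes."""
    changed = changed_elements(control, variant)
    if len(changed) != 1:
        raise ValueError(f"Test changes {len(changed)} elements: {sorted(changed)}")
    return changed.pop()


control = {"title": "Boots", "meta_description": "Shop boots.", "h1": "Boots"}
variant = dict(control, title="Boots | Free Shipping")
print(assert_single_variable(control, variant))   # title
```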

#Ending Your Test Prematurely

Another all-too-common blunder is pulling the plug on a test too soon. You kick off an experiment on Monday, and by Wednesday, you’re looking at a 15% lift in traffic. The excitement is real, and you’re itching to declare a winner and roll out the change. This is usually a trap, often caused by a random traffic spike or just the normal ebbs and flows of the week.

A test needs time to breathe. It has to run long enough to capture the full spectrum of user behavior, which can look very different on a Tuesday than it does on a Saturday. As a rule of thumb, always let your SEO tests run for complete weekly cycles - at least two full weeks is a solid baseline.

Don’t let a random traffic spike fool you into a false positive. Wait until you hit 95% statistical confidence, and run the test long enough to iron out daily weirdness. Patience is what separates a lucky guess from a reliable result.
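The stopping rule from this section can be stated as a single check. In this sketch, `ready_to_call` is a hypothetical helper: it only lets you call a test once it has run whole weeks, at least 14 days, and reached a p-value below 0.05. Checking it daily and stopping the first time it passes still raises the odds of a false positive, so plan the duration upfront and treat this as a floor, not a trigger.

```python
def ready_to_call(days_run: int, p_value: float,
                  alpha: float = 0.05, min_days: int = 14) -> bool:
    """True only after whole weekly cycles, a two-week minimum,
    and a p-value below alpha (alpha = 0.05 means 95% confidence)."""
    full_weeks = days_run % 7 == 0
    return days_run >= min_days and full_weeks and p_value < alpha


# Monday-to-Wednesday excitement: 3 days in, even a tiny p-value isn't enough.
print(ready_to_call(3, 0.001))    # False
print(ready_to_call(14, 0.03))    # True
```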

This discipline ensures your decisions are built on a stable trend, not a fleeting moment of good fortune. Jump the gun, and you might implement a change that does nothing - or even hurts your performance down the road.

#Overlooking Critical Technical Guardrails

Technical screw-ups are easily the most dangerous mistakes because they can have immediate, negative consequences for your SEO. There are two areas that demand your full attention: canonicalization and cloaking. When you’re running a test, you’re creating multiple versions of a page, and that can really confuse search engines if you’re not careful.

Without the right technical setup, you could find yourself in Google’s bad books.

- Misconfigured Canonical Tags: This is a big one. Every single variant page in your test must have a self-referencing canonical tag. This is your way of telling Google, “Hey, this URL is the official version of this page,” which stops it from being flagged as duplicate content of your control page.
- Accidental Cloaking: Cloaking means showing one thing to users and something else to Googlebot. If your testing tool relies on client-side rendering (making changes in the user’s browser), Google might not see the test version at all, or might see it inconsistently. That discrepancy can look like a violation of Google’s guidelines. Always opt for a server-side solution to ensure everyone sees the same thing.
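A quick audit catches the canonical mistake before Google does. This sketch uses Python’s standard `html.parser` to check that a page’s raw HTML declares exactly one canonical tag pointing at its own URL. How you fetch the HTML is left out, and the exact string comparison is a simplification: it does not normalize trailing slashes, hosts, or query strings. Checking the raw, unrendered HTML has a side benefit: if your variant change isn’t in it, the change is being applied client-side.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag == "link":
            attrs = dict(attrs)
            rels = (attrs.get("rel") or "").lower().split()
            if "canonical" in rels:
                self.canonicals.append(attrs.get("href"))


def is_self_canonical(url: str, html: str) -> bool:
    """True if the page declares exactly one canonical, and it points at itself."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals == [url]


page = '<head><link rel="canonical" href="https://www.example.com/c/boots"></head>'
print(is_self_canonical("https://www.example.com/c/boots", page))   # True
```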

I once saw a company test a new page layout but completely forget to update the canonical tag on the variant. Google found two nearly identical pages fighting for the same keywords, which diluted their ranking signals and caused both of them to tank in the SERPs. Nail these technical details from the very beginning, and you can run your SEO A/B testing program safely and gather clean data without risking your hard-earned rankings.

#Common Questions About SEO A/B Testing

When you first start with data-driven SEO, you’re bound to have questions. It’s a field with its own quirks, and a little confusion is totally normal. Let’s clear up some of the most common things people ask about SEO A/B testing.

#What’s the Real Difference Between SEO and CRO A/B Testing?

This question comes up all the time, and it’s a super important one. They’re two totally different games with different players and different rules.

Conversion Rate Optimization (CRO) testing is all about the user. You’re trying to get people who are already on your website to do something, like buy a product or fill out a form. In a CRO test, you show different versions of a page to different users at the same URL, and you measure things like conversion rates.

SEO A/B testing, however, is all about the search engine. The main goal is to get more organic traffic by making changes that Google’s algorithm likes. Instead of showing different versions to users, you split a group of similar pages into a control and a variant. We measure success here with search metrics, like clicks and impressions from Google.
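The difference shows up clearly in what gets randomized. A common way to assign buckets is to hash a key; this sketch (the `bucket` function and salt are hypothetical) uses the same logic for both kinds of test. In a CRO test the key is a visitor ID, so one URL serves both versions. In an SEO test the key is the page URL, so every visitor and Googlebot see the same version of any given page.

```python
import hashlib


def bucket(key: str, salt: str = "test-1") -> str:
    """Deterministically assign a key to "control" or "variant" (about 50/50).

    The same key always lands in the same bucket, with no stored lookup table.
    Changing the salt reshuffles assignments for a new test.
    """
    digest = hashlib.sha256(f"{salt}:{key}".encode("utf-8")).digest()
    return "variant" if digest[0] % 2 else "control"


print(bucket("visitor-8731"))   # CRO: randomize people at one URL
print(bucket("/c/boots"))       # SEO: randomize pages; everyone sees the same page
```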

#Which Tools Are Best for Running SEO Split Tests?

You could try to run a test manually, but honestly, it’s a massive headache and really easy to mess up. This is where dedicated tools come in - they do all the heavy lifting, from creating statistically sound page groups to handling the technical bits and analyzing the data.

A few of the heavy hitters in this space include:

- SearchPilot: A true market leader, known for its powerful statistical models and features built for big, enterprise-level sites.
- SplitSignal: This tool was born out of an agency and plays nicely with platforms like Semrush, making it a bit more accessible.
- Statsig: A really flexible experimentation platform that can handle SEO tests by letting you randomize based on URLs.

The best one for you really depends on your budget, your team’s technical skills, and how big your testing program is. The most important thing is picking a tool that renders changes server-side, so you know Google sees everything.

Be careful not to use a standard CRO tool for an SEO test. Many of them use client-side JavaScript to make changes, which means Googlebot might never even see your variant. Not only does this make your test useless, but it can also look like cloaking to Google.

#How Long Should an SEO A/B Test Run?

There’s no single magic number here. The simple answer is: “long enough to be sure.” A test’s timeline is really driven by two things: reaching statistical significance and riding out normal traffic ups and downs. At a bare minimum, a test needs to run for two full weeks to even out any weirdness between weekday and weekend search behavior.

Most of our tests run for somewhere between 14 and 28 days. A classic rookie mistake is to end a test early just because you see an exciting spike in traffic. That’s a great way to roll out a change that doesn’t actually work. You have to be patient and let the data cook until you hit a 95% confidence level.

#What if My Test Results Are Inconclusive?

It happens more often than you’d think. Sometimes a test just ends in a draw, with no clear winner. But that’s not a failure! It’s actually a valuable piece of information: you’ve learned that your proposed change didn’t have a detectable impact, good or bad, at the scale you tested.

When a test is inconclusive, don’t just throw it out. Dive into the secondary metrics. Maybe your click-through rate went up, but impressions dropped? Or perhaps rankings improved, but you didn’t get any more clicks? These little clues are gold for crafting your next hypothesis. An inconclusive result is still data, and it helps you get smarter for the next test.

Ready to stop guessing and start measuring? Rankdigger taps directly into your Google Search Console data to uncover your biggest opportunities for growth. Our platform helps you identify high-potential pages and keywords, giving you the data you need to build powerful, effective SEO A/B tests. Discover your next winning strategy at rankdigger.com.